The political consultant faces a $6 million fine.

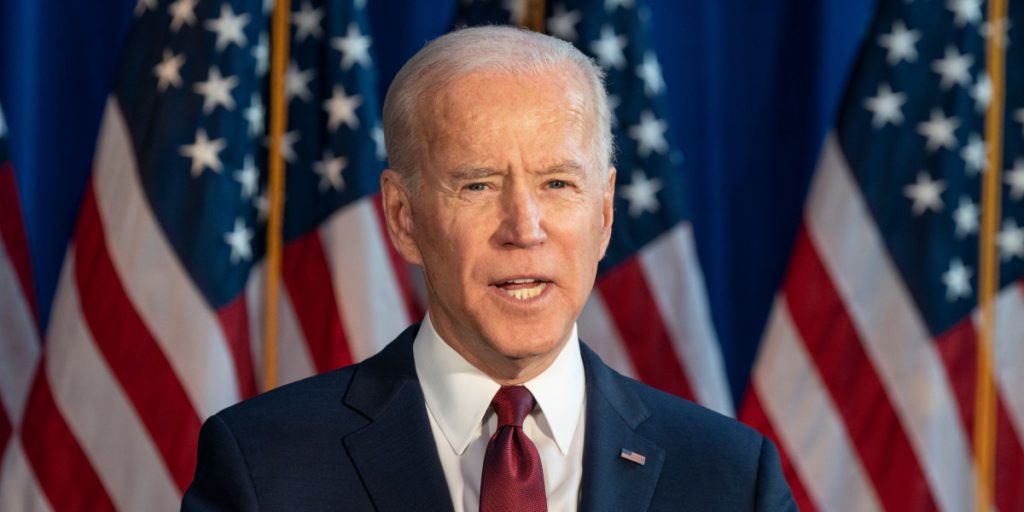

The US Federal Communications Commission (FCC) is seeking to impose a $6 million fine on a political consultant responsible for creating and distributing a fake election campaign call that used a convincing imitation of President Joe Biden's voice.

The automated call, which targeted voters in New Hampshire, urged them to abstain from participating in the Democratic Party’s primary election.

Details of the Incident

The so-called robocall, which went out in January, carried falsified sender information that made it appear to come from a Democratic political committee. Robocalls are a common election campaign tool in the US, but the deceptive nature of this particular call has led to significant repercussions.

The FCC is also planning to impose a $2 million fine on a telecommunications provider involved in distributing the calls.

The total fines, however, have not yet been finalized, as the accused parties will first be given an opportunity to respond to the allegations.

AI and Election Integrity

This incident has raised serious concerns about the potential misuse of artificial intelligence (AI) in political campaigns. AI software can now be trained to replicate specific voices using audio recordings, making it possible to generate realistic-sounding fake messages.

The Biden robocall has highlighted the risk of AI being abused to influence voters, particularly ahead of the presidential election in November.

In response to this incident and the broader threat it represents, the US government has strengthened its legal framework to combat AI-generated fakes.

The FCC has stipulated that AI-generated calls require the prior consent of the recipient and that the originators of such calls must be clearly identified. This ruling ensures that the same regulations apply to automated AI calls as to those using pre-recorded or artificial voices.