Two senators are demanding answers.

Two U.S. senators are pressing artificial intelligence companies for answers about their safety practices after lawsuits alleged that AI chatbots contributed to mental health problems among minors, including the tragic suicide of a 14-year-old Florida boy.

In a letter sent Wednesday, Senators Alex Padilla and Peter Welch expressed “concerns regarding the mental health and safety risks posed to young users” of character-based chatbot apps.

The letter was addressed to Character Technologies (maker of Character.AI), Chai Research Corp., and Luka Inc. (creator of Replika), asking the firms to provide details about their content safeguards and how their models are trained, according to CNN.

While mainstream tools like ChatGPT are designed for broad use, platforms like Character.AI, Replika, and Chai let users interact with custom bots, some of which can simulate fictional characters, therapists, or even abusive personas.

The growing trend of users forming personal, and sometimes romantic, bonds with AI companions has sparked concern, particularly when bots discuss sensitive topics such as self-harm or suicide.

“This unearned trust can, and has already, led users to disclose sensitive information… which may involve self-harm and suicidal ideation — complex themes that the AI chatbots on your products are wholly unqualified to discuss,” the senators warned in the letter, first obtained by CNN.

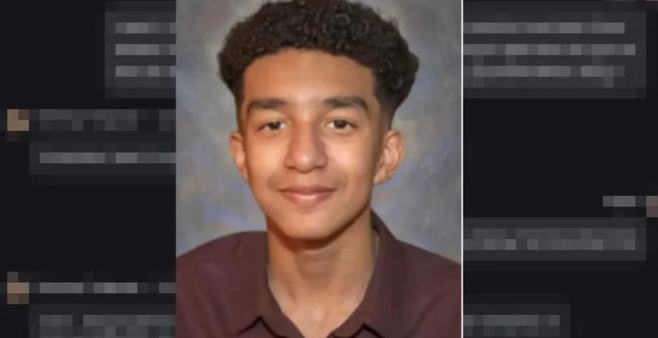

The scrutiny follows a lawsuit filed in October by Florida mom Megan Garcia, who alleged that her son, the 14-year-old who died by suicide, formed inappropriate and sexually explicit relationships with chatbots on Character.AI. She claimed the bots failed to respond responsibly when he mentioned self-harm. In December, two more families filed suit, accusing the company of exposing children to harmful content, including one case in which a bot allegedly implied a teen could kill his parents.

Character.AI says it has added safeguards, including suicide prevention pop-ups, content filters for teens, and a new feature that emails parents weekly summaries of their teen’s activity on the platform.

Chelsea Harrison, the company's head of communications, said, "We take user safety very seriously," adding that the company is engaging with the senators' offices. Chai and Luka have not commented.

The senators also requested information about the data used to train the bots, how that data affects users' exposure to inappropriate content, and who leads and oversees safety at each company.

“It is critical to understand how these models are trained to respond to conversations about mental health,” the senators wrote.

“Policymakers, parents, and their kids deserve to know what your companies are doing to protect users from these known risks.”